Running DeepSeek R1 Distilled Model on InHand AI Edge Computers

DeepSeek R1 is an open-source AI model that has drawn wide attention in the AI community for its efficiency and performance. Through knowledge distillation, the capabilities of a large model are transferred into much smaller models that still deliver strong inference performance. This combination of open-source accessibility and lightweight design lowers the barrier to AI deployment and opens new possibilities for edge computing.

InHand Networks’ AI technology team has successfully deployed the DeepSeek R1 distilled model on EC5000 series AI edge computers, demonstrating that lightweight edge devices can handle AI inference tasks. Compared with traditional cloud-based deployments, edge AI computing removes the dependence on high-performance servers and enables real-time inference in low-power environments. This makes AI solutions more flexible, secure, and efficient for applications such as industrial quality inspection, intelligent transportation, and telemedicine.

Running the DeepSeek R1 Distilled Model on EC5000 AI Edge Computers

With just a few steps, you can deploy the DeepSeek R1 distilled model on the EC5000 series edge computers:

Step 1: Install NVIDIA’s Jetson Containers Toolkit

(This toolkit helps manage and deploy containerized AI applications efficiently.)

Run the following commands to download and install the Jetson Containers toolkit:
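The original commands are missing from this copy of the article. A minimal sketch follows, assuming the toolkit in question is the open-source jetson-containers project published under the dusty-nv GitHub account and that `git` is already installed on the device:

```shell
# Assumption: "Jetson Containers toolkit" refers to the dusty-nv/jetson-containers repository.
git clone https://github.com/dusty-nv/jetson-containers

# Run the installer script shipped in the cloned repository.
bash jetson-containers/install.sh
```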

Step 2: Install the NVIDIA JetPack Toolkit

(JetPack provides essential drivers and libraries for running AI workloads on Jetson-powered devices.)

To install the JetPack toolkit, execute:
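The command itself is missing from this copy of the article. On Jetson devices that use NVIDIA's apt repositories, JetPack is usually installed through the `nvidia-jetpack` meta-package. The sketch below assumes that setup applies to the EC5000:

```shell
# Assumption: NVIDIA's apt repositories are already configured on the device.
sudo apt update

# Install the JetPack meta-package (drivers, CUDA libraries, container runtime).
sudo apt install -y nvidia-jetpack
```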

Wait about one minute before proceeding to the next step.

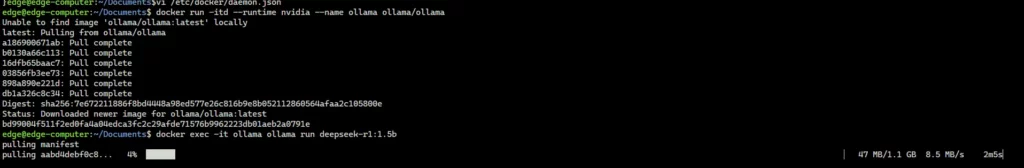

Step 3: Download and Run the Ollama Container

docker run -itd --runtime nvidia --name ollama ollama/ollama
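In the command above, `-d` starts the container in the background, `--runtime nvidia` selects the NVIDIA container runtime so the container can use the GPU, and `--name ollama` gives it a fixed name for later commands. As a quick sanity check (a sketch that is not part of the original steps), standard Docker commands can confirm that the container started:

```shell
# List running containers whose name matches "ollama".
docker ps --filter "name=ollama"

# Show the last lines of the Ollama server log to check for startup errors.
docker logs --tail 20 ollama
```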

Step 4: Download and Run the DeepSeek R1 Distilled Model with Ollama

Reference: DeepSeek R1 Library

Select the appropriate DeepSeek R1 distilled model from Ollama’s library and install it automatically via the command line. For example, to run the DeepSeek-R1-Distill-Qwen-1.5B model, execute:
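The command is missing from this copy of the article. Since Step 3 starts a detached container named `ollama`, one plausible form (an assumption, not necessarily the authors' exact command) is to run the Ollama CLI inside that container:

```shell
# Assumption: the container from Step 3 is running and is named "ollama".
# The first run downloads the model, then opens an interactive prompt.
docker exec -it ollama ollama run deepseek-r1:1.5b
```

Typing `/bye` at the Ollama prompt ends the session.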

You can replace deepseek-r1:1.5b with any other available model name from Ollama’s search page.

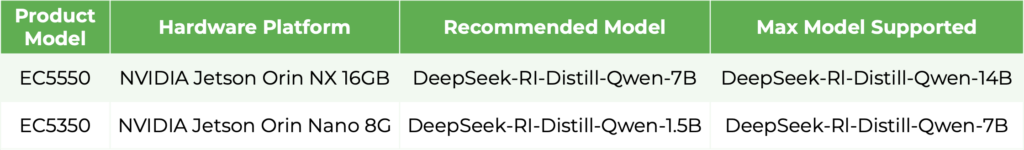

The table below shows the DeepSeek R1 distilled models supported by the EC5000 edge computers.

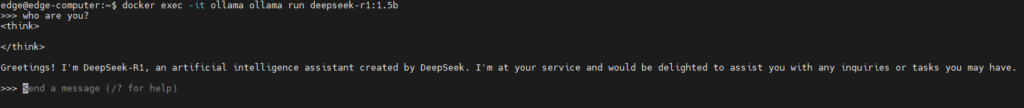

Interacting with the Model

Once the model is running, you can interact with it directly through the command line, enabling real-time queries and responses tailored to your specific AI applications.
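For scripted, one-shot queries, a small wrapper can be convenient. The sketch below defines a hypothetical helper named `ask` (not part of Ollama or the original article) that passes a model name and a prompt to `ollama run` inside the container from Step 3:

```shell
# ask: hypothetical helper, assumes a running container named "ollama".
# Usage: ask <model> <prompt words...>
# Example: ask deepseek-r1:1.5b "What is edge computing?"
ask() {
  local model="$1"
  shift
  # Without -it, "ollama run" prints the reply for the given prompt and exits.
  docker exec ollama ollama run "$model" "$*"
}
```

Because the prompt is passed as an argument, the call returns as soon as the model finishes answering, which makes it easy to use from other scripts.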

Note: Replace “deepseek-r1:1.5b” with the model name you want to use, based on your specific requirements.

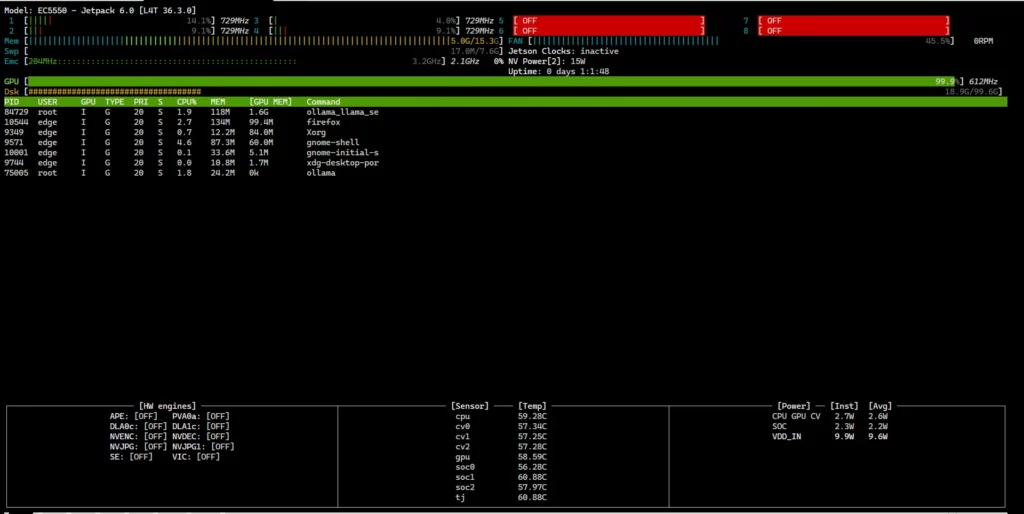

Monitoring EC5000 Hardware Utilization

To check the CPU, GPU, and memory usage of your EC5000 edge computer in real time, use the jtop command:
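The command is missing from this copy of the article. A minimal sketch, assuming `jtop` is provided by the jetson-stats package and may still need to be installed:

```shell
# Assumption: jtop is not yet installed; it ships with the jetson-stats package.
# sudo pip3 install -U jetson-stats

# Launch the monitor with root privileges.
sudo jtop
```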

This will display the current hardware status of the device.

Important: The jtop command must be executed with root privileges.

Additional Notes

- In addition to the DeepSeek R1 distilled model, the EC5000 series edge computers also support other open-source large language models (LLMs), such as LLaMA 3.

- Running LLMs on EC5000 edge computers via Ollama is only one option; other deployment methods can be chosen based on your specific needs.

Deploying the DeepSeek R1 distilled model on the EC5000 series demonstrates the seamless integration of cutting-edge AI with edge computing hardware, paving the way for a new era of lightweight, high-performance edge AI.

As distillation technology continues to evolve, businesses can use these advances to build private AI services that reduce computing costs while keeping data secure. Industries such as smart manufacturing, intelligent transportation, healthcare diagnostics, and autonomous vehicles stand to benefit from local data processing, reduced latency, enhanced data privacy, and real-time decision-making.

InHand Networks remains dedicated to advancing the edge intelligence ecosystem, empowering enterprises worldwide to embrace the future of intelligent edge computing.
